Virtue is a Vector

Treating things as good vs bad is too simple.

People like to categorize things as being good or bad. Motherhood and apple pie are good. Ebola is bad. Kittens are good. Hitler was bad.

Sometimes this is a reasonable model, but a lot of the time this “good/bad” framework breaks down, because pretty much everything we think of as “good” also does some form of harm, and most “bad” things are also beneficial in some way.

Animal testing allows the development of life saving drugs, but also causes torment to animals. The Black Death caused the death of millions of people, but also led to the end of feudalism and a cultural bloom. Nuclear power offers the promise of near limitless cheap sustainable power, but also risks spreading nuclear weapons. Social media has amplified polarization and destabilized society, but has also helped people connect and share knowledge. Google Search gives everyone easy access to knowledge, but places huge cultural power in the hands of a single company. Prescription opioids allow people with medical conditions to live with vastly less pain, but have also contributed to a crisis of addiction. Kittens are cute, but they also kill birds.

When we try to use the good/bad framework, we lose opportunities to build better products, to have better government policies, and to relate better to other people.

In this essay I argue that we should instead think of virtue as being a vector, where the vector’s different dimensions are the ways in which it is good or bad. This gives us a framework that allows us to think much more crisply about things like trade-offs, diminishing returns, harm-mitigation, different moral frameworks, and situational context.

This essay is part of a broader series of posts about how ideas from tech and AI apply to broader society, including The Power of High Speed Stupidity, Simulate the CEO, Artificial General Horsiness, and Let GPT be the Judge. If you like this stuff then I encourage you to subscribe, because I expect to write more posts on this theme.

As my doctor once told me, there are two kinds of medicines: the ones that don’t work, and the ones that have side effects.

The reason we take our medications is that we expect the “good” from the benefits to exceed the “bad” from the side effects. But that is only true if we take the right dose and we have the condition the drug is intended to treat. One Tylenol tablet will cure my headache. Twenty will kill me. A Tylenol a day even when I don’t have a headache will gradually destroy my liver.

Rather than thinking of Tylenol as being good or bad, we should think of it as a vector.

A vector describes something by scoring it in multiple dimensions. In physics, you might describe an object's position using a vector that gives x, y, and z coordinates. In AI, you might describe a bear as being an object that has high “aliveness”, “scariness”, “fatness”, and “cuddliness”.

A vector can also describe a movement from one position to another. In physics, you can describe the velocity of an object by saying how much it will change its x, y, and z position by going at that speed for a second. For Tylenol, we can model its effect on the body by how one tablet changes your pain level, and how much it changes your liver stress level.

One of the nice things about vectors is that you can add them together. If I move two steps right and one step back, and then move two steps forward and one step left, I end up having moved one step right and one step forward.
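As a minimal sketch of that addition in Python: the two-dimensional layout (steps right, steps forward) and the helper name `add_vectors` are my own choices for illustration, with negative numbers standing for left and back.

```python
def add_vectors(a, b):
    """Add two vectors component by component."""
    return tuple(x + y for x, y in zip(a, b))

# Dimensions: (steps right, steps forward). Negative means left / back.
first_move = (2, -1)   # two steps right, one step back
second_move = (-1, 2)  # one step left, two steps forward

total_move = add_vectors(first_move, second_move)
print(total_move)  # (1, 1): one step right, one step forward
```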

The same principle applies to virtue vectors. If you want to do something that contains a mix of good and bad, it often makes sense to combine it with something else that counterbalances the bad.

If I put my clothes in a washing machine, they become clean, but also become wet. But if I combine my washer with a dryer, I end up with clothes that are clean and dry. Notably, both the washer and the dryer are net-harmful when used alone. If I only use a washer, the wetness of my clothes outweighs their cleanness, and if I only use a dryer, the electricity cost outweighs making my already dry clothes marginally drier.

When I launched featured snippets at Google Search, we allowed users to answer questions much faster than before by showing direct answers to their questions, but we also slowed down the results page, making search generally less pleasant to use. Fortunately, we were able to combine the featured snippets launch with other changes that improved the speed of Google Search, resulting in a combined change that was clearly net positive.

One of the principles of neoliberalism is to use loosely guided free markets to create a vibrant economy, and counter-balance the resulting inequality with progressive taxation and support programs to help those who lose their jobs through creative destruction.

So often it doesn’t make sense to think about whether it is good to do something in isolation, because things are rarely done in isolation. Instead you should think about whether there is a combination of things that can be done together that will lead to a good outcome, in the particular situation you are in.

Most things in life have diminishing returns. Having more of them is good up to a certain point, and then improving them matters less and less until you really don’t care any more.

If I wash my clothes a thousand times they won’t be much cleaner than if I wash them once. If I water a plant with a thousand gallons of water, it won’t grow any better than if I give it the correct amount. If I exercise for ten hours a day, I won’t live any longer than if I exercise for one hour a day. If I have a billion dollars, I won’t have a significantly higher quality of life than if I have a million dollars. If I blow up my house with a million tons of TNT, it won’t be notably more destroyed than if I blow it up with one ton of TNT.

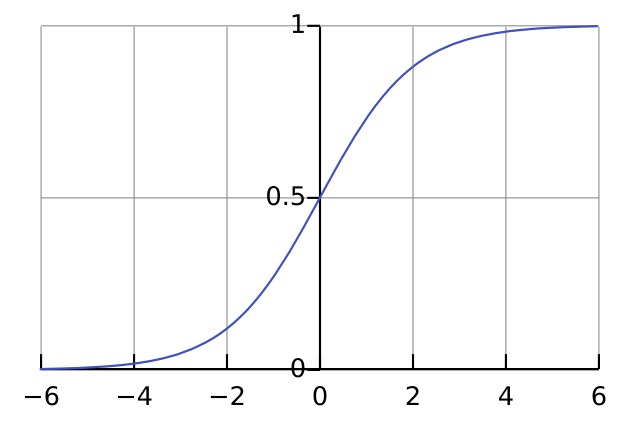

In AI it’s common to model this using a sigmoid function, also known as an S-curve:

Nature abhors a vacuum, but nature loves an S-curve. If you aren’t sure how a system will respond to some input, it’s usually safe to assume it follows an S-curve, or at least some region of an S-curve. If a relationship looks linear, it’s probably the middle of an S-curve. If it looks exponential, it’s probably the left hand side of an S-curve. But pretty much everything hits the right hand side of the S-curve eventually. Diminishing returns are a near-universal fact of life.

So if you have some input value (e.g. how many times you washed your clothes) and want to turn it into a meaningful outcome (e.g. how clean your clothes are), then a good approach is typically to apply an S-Curve function to it. To make sure you get the right part of the S-Curve, you can first multiply the input number by a weight (one hour of washing is different to one minute of washing), and add a bias (where you start on the curve depends on how dirty your clothes were at the beginning).
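Here is a rough Python sketch of that recipe. The function name `cleanliness` and the particular weight and bias values are invented for illustration; they are not measured from anything.

```python
import math

def sigmoid(x):
    """Numerically stable S-curve that maps any number into the range 0..1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def cleanliness(washes, weight=3.0, bias=-2.0):
    """Hypothetical outcome model: how clean the clothes are after `washes` washes.

    weight: how much one wash moves us along the curve (made-up value).
    bias:   where we start on the curve, i.e. how dirty the clothes were (made-up value).
    """
    return sigmoid(weight * washes + bias)

for washes in (0, 1, 2, 10, 1000):
    print(washes, round(cleanliness(washes), 3))
```

With these made-up numbers, the first wash does most of the work, and the difference between ten washes and a thousand is too small to notice.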

How do you know what the weight and bias numbers should be? We’ll get to that later.

I said before that it wasn’t useful to give things a single value of goodness or badness, because everything is a combination of goodness and badness.

However, what is useful is to give a single goodness score to a set of things that you might do together in a particular situation. You need to have such a score in order to compare different options of what you should do.

People will of course disagree about how to balance all these good/bad things to make a single score. We’ll get to that later, but to begin with, let’s imagine we just want to create a single score that represents the total “goodness of the world” after some combination of actions has been performed.

The first step is to create a vector that describes the state you’d expect to end up in if you started with the current state of the world, and applied a combination of actions. The simplest way to do this is to take a vector describing the current situation (e.g. how dirty are my clothes, how low is my crime level, how hungry am I) and then simply add the vectors representing all of the actions that I propose doing. This will give you a vector representing the expected value of all these things after I’ve done all these actions.
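A small Python sketch of this step, using the washer and dryer from earlier. The dimension names and every number here are assumptions I made up for illustration, and `apply_actions` is a hypothetical helper, not part of any library.

```python
def add_vectors(a, b):
    """Add two vectors stored as dicts; a missing dimension counts as zero."""
    return {k: a.get(k, 0.0) + b.get(k, 0.0) for k in set(a) | set(b)}

def apply_actions(state, actions):
    """Expected state after starting from `state` and performing every action."""
    result = dict(state)
    for action in actions:
        result = add_vectors(result, action)
    return result

# Made-up scores: higher is better on every dimension.
current = {"cleanness": -2.0, "dryness": 1.0, "money": 0.0}
washer = {"cleanness": 3.0, "dryness": -3.0, "money": -0.5}
dryer = {"dryness": 3.0, "money": -0.5}

after = apply_actions(current, [washer, dryer])
print(after)
```

Simple addition assumes that actions don't interfere with each other, which is the simplifying assumption the paragraph above makes.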

Now I’ve got a single vector, which is progress, but I still need to turn it into a single “goodness score” number in order to compare it against other options.

One common way to do this in AI is to apply an S-curve to each of the dimensions individually (to take account of diminishing returns), multiply each of the dimensions by a weight that specifies how much you care about that dimension, and then sum them all together. E.g. maybe I care a thousand times as much about whether I’m about to die from liver failure as whether my headache has gone away.
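To make that concrete, here is a sketch in Python using the Tylenol example. The starting state, the per-tablet effect, and the weights are all numbers I invented so that the example behaves sensibly; only the thousand-to-one ratio comes from the text above.

```python
import math

def sigmoid(x):
    """Numerically stable S-curve that maps any number into the range 0..1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

# How much I care about each dimension (liver matters 1000x more than headache).
WEIGHTS = {"pain_relief": 1.0, "liver_health": 1000.0}

def value_model(state, weights=WEIGHTS):
    """Squash each dimension with an S-curve, weight it, and sum to one score."""
    return sum(w * sigmoid(state[dim]) for dim, w in weights.items())

def state_after(tablets):
    """Hypothetical state after taking `tablets` tablets (all numbers made up)."""
    start = {"pain_relief": -2.0, "liver_health": 10.0}  # headache, healthy liver
    per_tablet = {"pain_relief": 4.0, "liver_health": -0.5}
    return {dim: start[dim] + tablets * per_tablet[dim] for dim in start}

for tablets in (0, 1, 20):
    print(tablets, round(value_model(state_after(tablets)), 2))
```

Under these assumptions one tablet scores a little higher than none, and twenty tablets score far lower than either, because the liver dimension falls off the flat part of its S-curve.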

In practice you typically need to do something a bit more complex than this, because the different dimensions of goodness aren’t independent. For example I might have several dimensions about my clothes, saying how wet they are, how dirty they are, and how destroyed they are, but if my clothes are wet then it doesn’t matter much that they are dirty because I can’t wear them. You can deal with this by grouping together the things that are similar, squashing them all together with another sigmoid, and then feeding them into a higher level weighted sum. Take this far enough and you essentially end up with a neural network.
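A sketch of that grouping idea in Python, assuming a hypothetical “wearability” group for the clothes dimensions. The weights, the bias, and the extra `money` dimension are invented for illustration.

```python
import math

def sigmoid(x):
    """Numerically stable S-curve that maps any number into the range 0..1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def wearability(clothes):
    """Lower level: squash the related clothes dimensions into one 0..1 score."""
    total = (4.0
             - 10.0 * clothes["wet"]
             - 3.0 * clothes["dirty"]
             - 10.0 * clothes["destroyed"])
    return sigmoid(total)

def goodness(state):
    """Higher level: weighted sum over the grouped scores."""
    return 10.0 * wearability(state["clothes"]) + 1.0 * sigmoid(state["money"])

def gain_from_cleaning(wet):
    """How much the overall score improves when dirty clothes become clean."""
    dirty = {"clothes": {"wet": wet, "dirty": 1.0, "destroyed": 0.0}, "money": 0.0}
    clean = {"clothes": {"wet": wet, "dirty": 0.0, "destroyed": 0.0}, "money": 0.0}
    return goodness(clean) - goodness(dirty)

print("gain when dry:", round(gain_from_cleaning(wet=0.0), 3))
print("gain when wet:", round(gain_from_cleaning(wet=1.0), 3))
```

Because the wet clothes already sit on the flat bottom of the group's S-curve, cleaning them barely moves the overall score, which is the interaction a plain weighted sum cannot express.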

In AI, this function that maps a multi-dimensional vector to a single “goodness” value is known as a value model.

But how do you know what the correct value model is? How do you know how to weigh the importance of your clothes being wet vs clean, or search being fast vs relevant, or a city being safe vs free?

The short answer is that this is often really hard. Often when people disagree about the correct thing to do, it isn’t because one group wants good things and one group wants bad things, but because they disagree about how to weigh one set of good things against another set of good things.

But there are some things we can try, and which often give good results.

One approach is to build a value model that predicts some external source of truth. For example you can try to predict a citizen’s self-reported happiness from various measures of their well-being. Or you can look at the historical record and create a value function that predicts the long-term health of a city, given its scores ten years earlier. Or you can build a value function that predicts actions that are infrequent but important, such as users leaving your product, or upgrading their membership package.
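As a toy illustration of fitting a value model to an external source of truth, the Python sketch below learns weights that predict a happiness score from two well-being measures. The four data points are fabricated purely so the example runs, and the technique (a single sigmoid trained by gradient descent on log loss) is just one simple option I picked, not a recommendation.

```python
import math

def sigmoid(x):
    """Numerically stable S-curve that maps any number into the range 0..1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def predict(weights, bias, features):
    """Value model: weighted sum of the features pushed through an S-curve."""
    return sigmoid(bias + sum(w * f for w, f in zip(weights, features)))

def fit(examples, steps=5000, learning_rate=0.5):
    """Learn weights and bias by gradient descent on log loss."""
    n_features = len(examples[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for features, target in examples:
            error = predict(weights, bias, features) - target
            for i, f in enumerate(features):
                grad_w[i] += error * f
            grad_b += error
        weights = [w - learning_rate * g / len(examples)
                   for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(examples)
    return weights, bias

# FABRICATED toy data: (income_security, health) -> self-reported happiness in 0..1.
examples = [
    ((0.0, 0.0), 0.1),
    ((1.0, 0.0), 0.3),
    ((0.0, 1.0), 0.6),
    ((1.0, 1.0), 0.9),
]

weights, bias = fit(examples)
print("learned weights:", [round(w, 2) for w in weights], "bias:", round(bias, 2))
```

On this made-up data the learned weight for health comes out larger than the one for income security, which is simply a reflection of how I constructed the examples.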

Or you can come up with a “basket” of multiple such value functions, grounded in different external sources of truth. If the numbers disagree, then you don’t just treat one of them as being more authoritative, but you treat the fact they disagree as a motivation to think deeply about what is going on, and how to improve your analysis.

Or you can divide your system into several different parts, run them with different value functions, and see which ends up leading to better results over a longer period of time. This is the essence of pluralism.

But you’ll never really know for sure that you have the right value function, and you’ll often find out that you’ve been making the world worse when you thought you were making it better. Life is messy and to some extent you just have to deal with that.

It’s important to realize that the ideal value model depends on the situation you are in. A common mistake in both tech and life is to use a value model that made sense in a different product or society, but doesn’t make sense in this one. In essence, you have a value model that did well at solving the problems you used to have, but not at solving the problems you have right now.

Facebook fell into this trap a few years ago, when they had a value model that optimized for short term engagement. This worked great for many years, but eventually caused people to shift their attention away from their friends, and towards more engaging content created by publishers (particularly video). This then weakened the incentive for people to create new posts (since nobody was reading them), and risked breaking Facebook’s network effects.

The same problem can occur in society, where a society gets stuck with a moral system that was well suited to the place and time when it originally appeared, but is poorly suited to the challenges the society needs to deal with right now. Similarly, people in one social group should be mindful of the fact that the moral principles that work best for their group today may not be those that work best for a different group or for people living in a different time.

The solution to this isn’t to give up on value models (or moral principles) altogether, but to always be aware that the model you have might be wrong.

I used to run a debate club in San Francisco. One of my jobs as leader of the debate club was to find people who could speak both for and against whatever proposition we were debating that day. If I couldn’t find anyone willing to debate a topic, then I’d often fill in myself. One consequence of this is that I found myself almost exclusively arguing for the opposite opinion to the one I actually held.

What I found was that it was always possible to create a compelling data driven argument in favor of any position that is believed by a non-trivial set of people. In every case there were clear reasons why what they wanted would have good outcomes, and why what their opponents wanted would have bad outcomes.

When people disagree, it is almost never because one group is good people who want good things, and the other group is bad people who want bad things. It is almost always because there are two groups who both want more good things and less bad things, but they disagree about how to weigh the relative importance of different ways that things can be good or bad.

Animal rights protestors don’t want to prevent the development of new medicines, and medical researchers don’t want to torture bunnies. Nuclear advocates don’t want to spread nuclear weapons, and nuclear opponents don’t want to prevent renewable energy. Gun rights supporters don’t want to kill school children, and gun control advocates don’t want totalitarian government.

There probably are some people who are motivated by bad intentions like sadism and selfishness, but this is far rarer than people like to imagine. Everyone thinks they are the good guys, but everyone is using a different value model to tell them what “good guys” means.

The more we understand the complexity of what it means for things to be good, and the difficulty of knowing you really have the right value model, the better we’ll be able to relate to each other.

Everything good is also bad. Almost everything bad is also good. This means that we constantly have to deal with tricky trade-offs and tensions when deciding what actions we should or shouldn’t do. This is true in life, in society, and in product development.

It’s tempting to simplify the world by classifying things as good or bad, but I think that model is too simple, and often leads to poor decision making and unnecessary conflict.

Treating virtue as a vector is still a simplification, but I think it’s a better simplification. It allows us to talk concretely about a lot of the trickiness that comes up when balancing good and bad, in a way that has the potential to lead to good solutions. In particular, it gives us a better way to talk about trade-offs, diminishing returns, harm mitigation, context, and conflicting moral principles.
